this post was submitted on 27 Dec 2023

1311 points (95.9% liked)

Microblog Memes



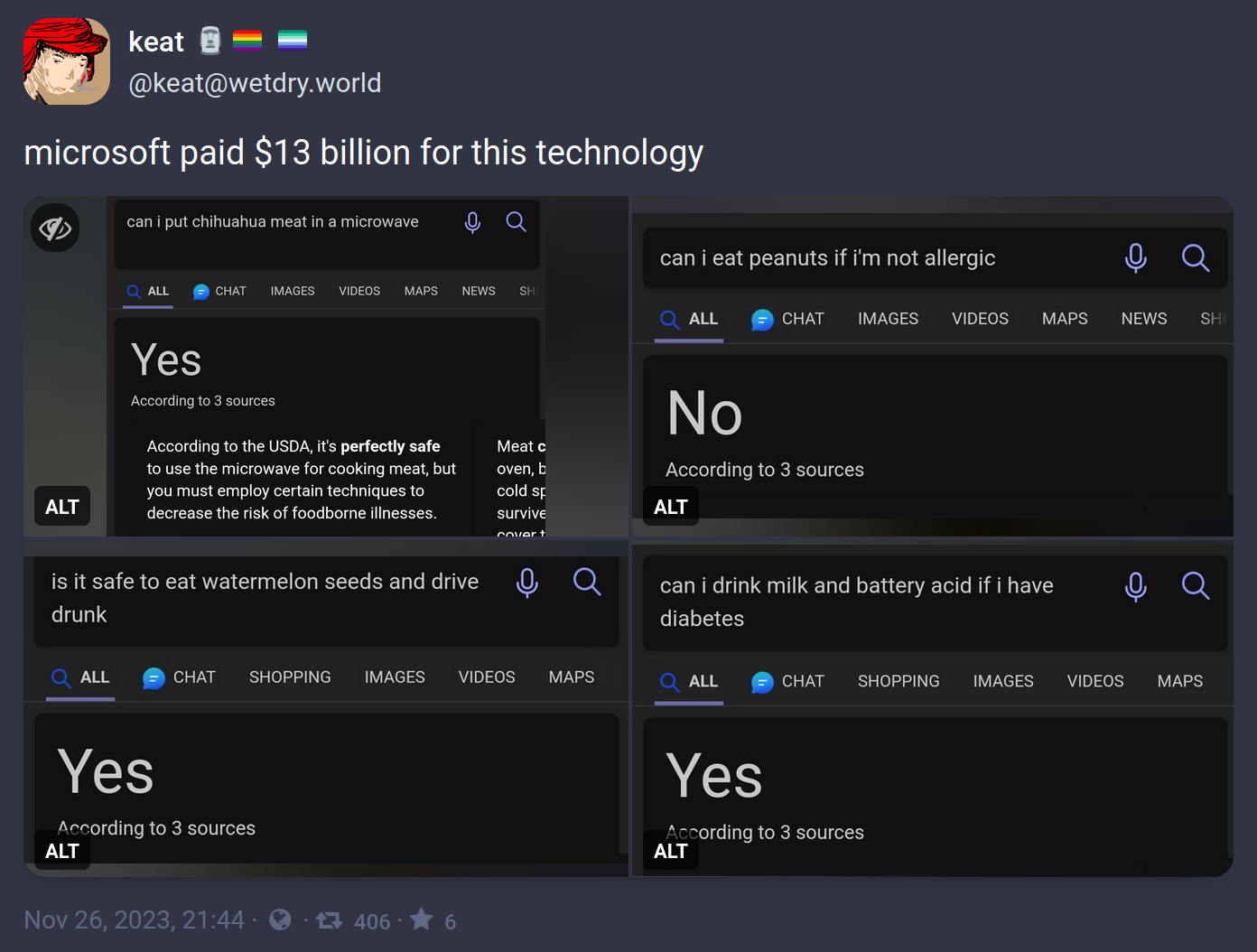

Aren't these just search answers, not the GPT responses?

No, that's an AI-generated summary that Bing (and Google) show for a lot of queries.

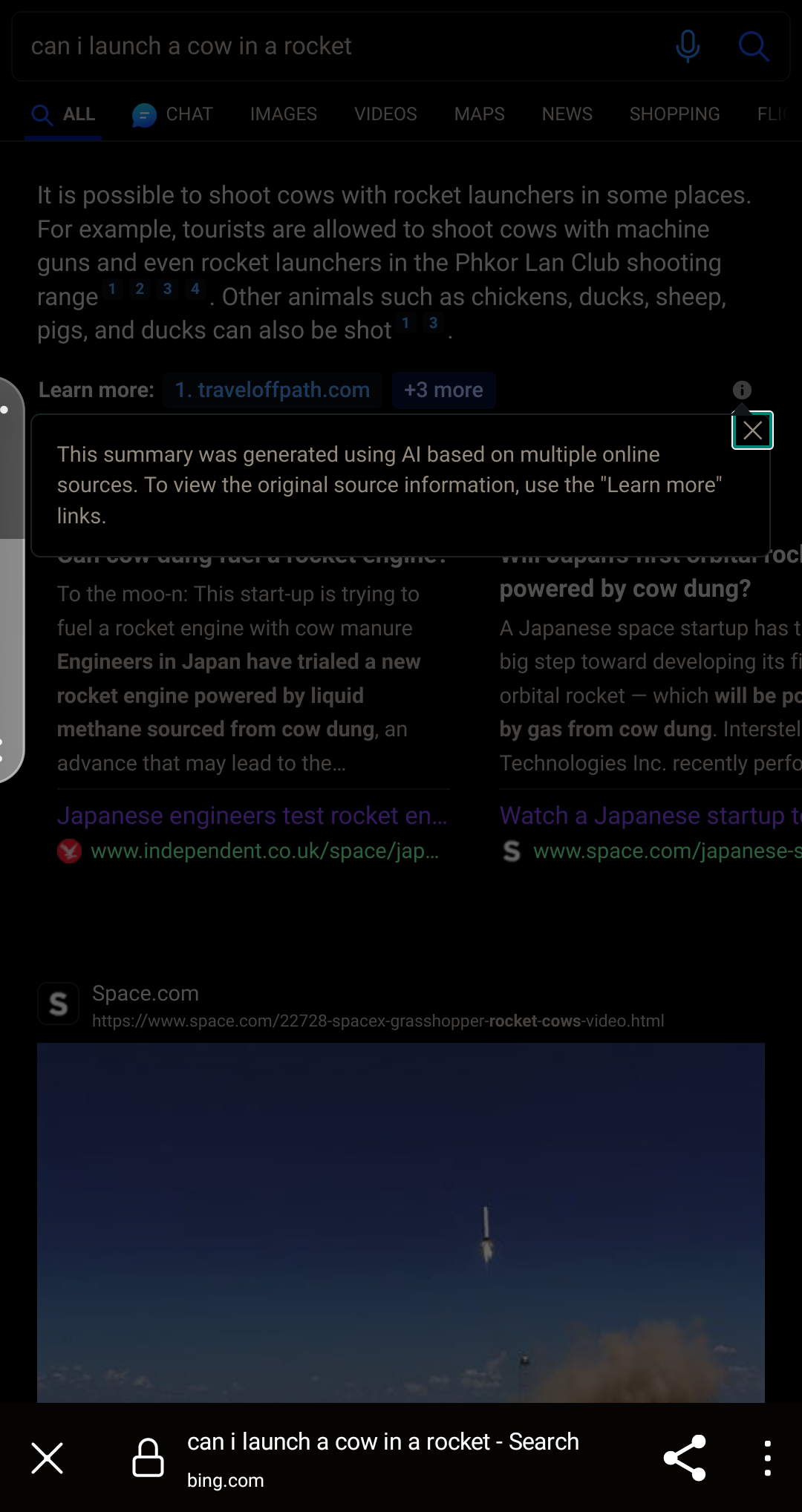

For example, if I search "can i launch a cow in a rocket", it suggests it's possible to shoot cows with rocket launchers and machine guns, and names a shooting range that offers it. Thanks, Bing... I guess...

You think the culture wars over pronouns have been bad, wait until the machines start a war over prepositions!

Purely out of curiosity… what happens if you ask it about launching a rocket in a cow?

You're incorrect. This is being done with search matching, not by an LLM.

The LLM answers Bing added appear in the chat box.

These are Bing's version of Google's OneBox, which predated Microsoft's relationship with OpenAI.

Screenshot of the search after the i-icon has been tapped

Yes, they've now replaced the legacy system with one using GPT-4, hence the citations in the summary description, the same as in the chat format.

Try the same examples as in OP's images.

The box has a small i-icon that literally says it's an AI-generated summary.

They've updated what's powering that box; see my other response to your similar comment, the one with the image.

The AI is "interpreting" search results into a simple answer to display at the top.

And you can abuse that by asking two questions in one. The summarized yes/no answer will only address the first one, so you can put whatever else you want in the second, like drinking battery acid or driving drunk.

Yes. You are correct. This was a feature Bing added to match Google's OneBox answers, and it isn't using an LLM but, most likely, search matching.

Bing shows the LLM response in the chat window.