this post was submitted on 03 Oct 2024

159 points (100.0% liked)

chapotraphouse



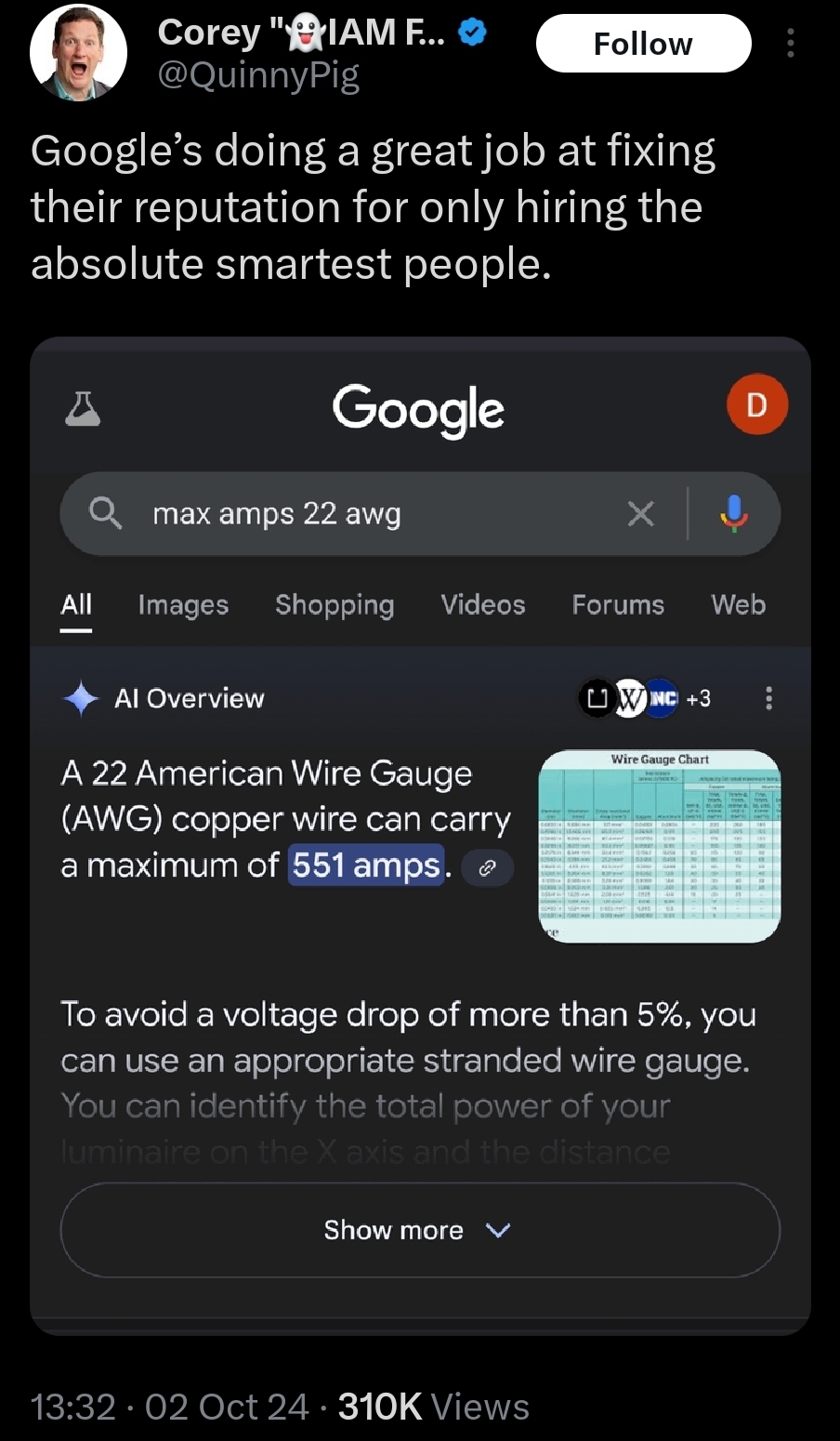

It's not that they hired the wrong people, it's that LLMs struggle with both numbers and factual accuracy. This isn't a personnel issue, it's a structural issue with LLMs.

Because LLMs just basically appeared in Google search and it was not any Google employee's decision to implement them despite knowing they're bullshit generators /s

I mean, define employee. I'm sure someone with a Chief title was the one who made the decision. Everyone else gets to do it or find another job.

I mean, LLMs are cool to work on and a fun concept. An n-dimensional regression where n is the trillions of tokens in your dataset is cool. The issue is that it is cool in the same way as a grappling hook or a blockchain.

They're Rube Goldbergian machines of bullshit but the bullshit peddlers (and the glazers) insist that adding more Rube Goldbergian layers to the Rube Goldberg machines will remove the systemic problems with it instead of just hiring people to fact-check. Hatred of human workers is the point, and even when they are used, they're made as invisible as possible, so it's just a Mechanical Turk in that case.

All of this, all that wasted electricity, all that extra carbon dumped into the air, all so credulous rubes can feel like the Singularity(tm) is nigh.

Google gets around 9 billion searches per day. Human fact-checking of Google search quick responses would be impossible. If each fact check takes 30 seconds, you would need close to 10 million people working full time just to fact-check that.
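For anyone who wants to check that estimate, here is a rough sketch of the arithmetic. The 9 billion searches and 30 seconds per check come from the comment above. The 8-hour workday, the idea that every search needs a check, and the function name `fact_checkers_needed` are assumptions made for illustration only.

```python
# Back-of-the-envelope headcount estimate.
# Assumptions (not from the thread): an 8-hour workday with no breaks,
# every search gets checked, and checkers work every day searches come in.
SECONDS_PER_WORKDAY = 8 * 60 * 60


def fact_checkers_needed(searches_per_day, seconds_per_check,
                         seconds_per_workday=SECONDS_PER_WORKDAY):
    """Return how many full-time checkers are needed to cover one day of searches."""
    total_check_seconds = searches_per_day * seconds_per_check
    return total_check_seconds / seconds_per_workday


needed = fact_checkers_needed(9_000_000_000, 30)
print(f"{needed:,.0f} full-time fact checkers")  # prints "9,375,000 full-time fact checkers"
```

Under those assumptions the result is about 9.4 million, which is in line with "close to 10 million". Weekends, breaks, or only checking a subset of searches would move the number in either direction.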

Just let them call in for answers with real people instead. Then it takes more effort, and fewer people will do it when it's not important.

Edit: also I'm pretty sure Google could hire 10 million people

Assuming minimum wage at full time, that is 36 billion a year. Google extracts 20 billion in surplus labor per year, so no, Google could not hire 10 million people.

Are you also suggesting it's impossible for specific times that it really matters, such as medical information?

Maybe having Rube Goldbergian machines that burn the forests and dry the lakes while providing dangerously nonsensical answers is a bad idea in the first place.

Firstly, how do you filter for medical information in a way that works 100% of the time? You are going to miss a lot of medical questions because NLI has countless edge cases. Secondly, you need to make sure your fact checkers are accurate, which is very hard to do. Lastly, you are still getting millions and millions of medical questions per day, and you would need tens of thousands of medical fact checkers who need to be perfectly accurate. Having fact checkers will lull people into a false sense of security, which will be very bad when they inevitably get things wrong.

That is a good question, and it goes double for what you're apparently running interference for. How exactly does a sheer volume of bullshit justify bullshit being generated by LLMs in medical fields?

Yes, and the magic of LLMs means those medical questions are going to be missed a lot more often and faster than ever before.

That's an amazing take: the errors aren't as bad as attempts to mitigate the flood of errors from the planet-burning bullshit machines.

If you see a note saying "This was confirmed to be correct by our well-trained human fact checkers" and one saying "[Gemini] can make mistakes. Check important info.", you are more likely to believe the first than the second. The solution here is to look at actual articles with credited authors, not to have an army of people reviewing every single medical query.

I'm still not seeing a safe or even meaningful use case for the treat printers here, especially not for the additional electricity and waste carbon costs. Were medical data queries impossible before LLMs? No, they were not.

LLM usage here doesn't help, that's true. But medical queries weren't good before LLMs either, just because it's an incredibly complex field with many edge cases. There is a reason self-diagnosis is dangerous, and it isn't because of technology.

Yes, I'm glad we can agree on that.

The rest of what you were saying seems kind of like a pointless derail because you were defending something that's already indefensibly bad for its supposed use case here.