Okay, two separate thoughts here:

- Paul G is so fucking close to getting it, Christ on a bike

- How the fuck do you get burned by someone as soulless as Sam Altman?

Also, there already is a bsky user with the username leoxiv, who, from what I can tell, makes Final Fantasy poses, some NSFW. Lol.

Being a bit more specific, it's Final Fantasy XIV, which you've probably heard about from people using its free trial as meme material. It's also a better example of the metaverse than any actual metaverse out there, but that's a given for literally any MMO that has popped up in the last twenty fucking years.

Also:

In case you missed it, a couple sneers came out against AI from mainstream news outlets recently - CNN's put out an article titled "Apple’s AI isn’t a letdown. AI is the letdown", whilst the New York Times recently proclaimed "The Tech Fantasy That Powers A.I. Is Running on Fumes".

You want my take on this development, I'm with Ed Zitron on this - this is a sign of an impending sea change. Looks like the bubble's finally nearing its end.

I've already talked about the indirect damage AI's causing to open source in this thread, but this hyper-stretched definition's probably doing some direct damage as well.

Considering that this "Open Source AI" definition is (almost certainly by design) going to openwash the shit out of blatant large-scale theft, I expect it'll heavily tar the public image of open source, especially when these "Open Source AIs" start getting sued for copyright infringement.

If we're lucky, it'll cut off promptfondlers' supply of silicon and help bring this entire bubble crashing down.

It'll probably also cause major shockwaves for the tech industry at large, but by this point I hold nothing but unfiltered hate for everyone and everything in Silicon Valley, so fuck them.

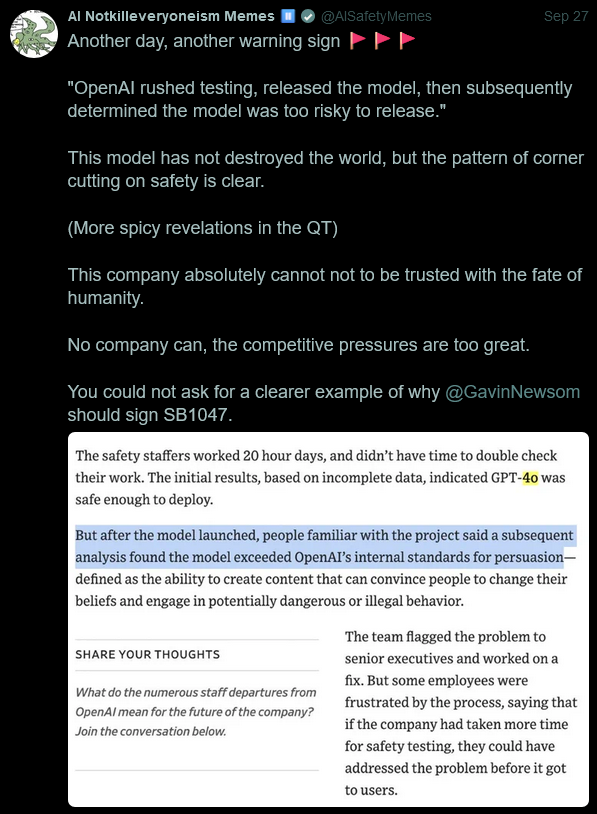

I vaguely remember mentioning this AI doomer before, but I ended up seeing him openly stating his support for SB 1047 whilst quote-tweeting a guy talking about OpenAI's current shitshow:

I've had this take multiple times before, but now I feel pretty convinced the "AI doom/AI safety" criti-hype is going to end up being a major double-edged sword for the AI industry.

The industry's publicly and repeatedly hyped up this idea that they're developing something so advanced/so intelligent that it could potentially cause humanity to get turned into paperclips if something went wrong. Whilst they've succeeded in getting a lot of people to buy this idea, they're now facing the problem that people don't trust them to use their supposedly world-ending tech responsibly.

New piece from The Atlantic: A New Tool to Warp Reality (archive)

Turns out the bullshit firehoses undeservedly called chatbots have some capacity to generate a reality distortion field of sorts around them.

Not a sneer, but a deeply cursed dream from a friend:

It'd be the sole silver lining of this entire two-year bubble.

Not a sneer, but a mildly interesting open letter:

A specification for those who want content searchable on search engines, but not used for machine learning.

The basic idea is effectively an extension of robots.txt which attempts to resolve the issue by providing a means to politely ask AI crawlers not to scrape your stuff.
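For anyone who hasn't looked at how the "politely ask" part works in practice, here's a minimal sketch using Python's standard `urllib.robotparser`. It uses plain robots.txt rules, not the proposal's own syntax (which isn't reproduced here), and the crawler names `ExampleSearchBot` and `ExampleAIBot` are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one (made-up) AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def may_fetch(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if the given robots.txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines; no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/blog/post"
    print("search crawler allowed:", may_fetch("ExampleSearchBot", url))
    print("AI crawler allowed:", may_fetch("ExampleAIBot", url))
```

Note where the check runs: inside the crawler. Nothing on the server enforces it, so a crawler that skips the call, or lies about its user agent, gets the page anyway.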

Personally, I don't expect this to ever get off the ground or see much usage - this proposal is entirely reliant on trusting that AI bros/companies will respect people's wishes and avoid scraping shit without people's permission.

Between OpenAI publicly wiping their asses with robots.txt, Perplexity lying about user agents to steal people's work, and the fact a lot of people's work got stolen before anyone even had the opportunity to say "no", the trust necessary for this shit to see any public use is entirely gone, and likely has been for a while.

Reject search engines, return to webrings/directories

That I can see. Unlike software "engineering", law is a field which has high and exacting standards - and faltering even slightly can lead to immediate and serious consequences.