Discovered new manmade horrors beyond my comprehension today (recommend reading the whole thread, it goes into a lot of depth on this shit):

In other news, Kevin MacLeod just received some major backlash for generating AI slop, with the track Kosmose Vaikus (which is described as made using Suno) getting the most outrage.

Ran across a piece from Jan Wildeboer: Botnet Part 2: The Web is Broken, which focuses on the "residential proxy" services which he discovered to be a likely source of the AI slop scrapers that are DDoSing the 'Net.

Ending paragraph is pretty notable IMO, so I'm dropping it here:

I am now of the opinion that every form of web-scraping should be considered abusive behaviour and web servers should block all of them. If you think your web-scraping is acceptable behaviour, you can thank these shady companies and the “AI” hype for moving you to the bad corner.

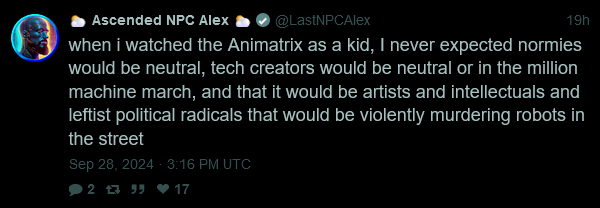

Saw an unexpected Animatrix reference on Twitter today - and from an unrepentant promptfondler, no less:

This ended up starting a lengthy argument with an "AI researcher" (read: promptfondler with delusions of intelligence), which you can read if you wanna torture yourself.

So you're saying that they've got books worth at least a grand which their owners are literally using to flaunt their wealth?

I'm legally obligated to say stealing is legally and morally wrong buuuuuuuut

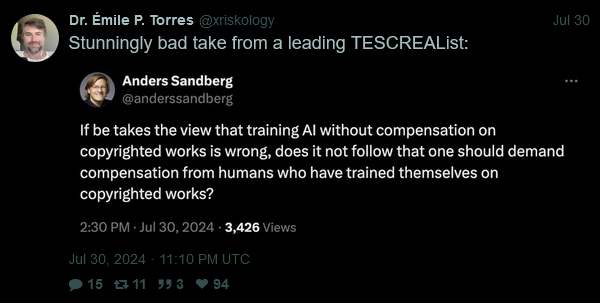

@ai_shame RT'd a good sneer in the wild recently:

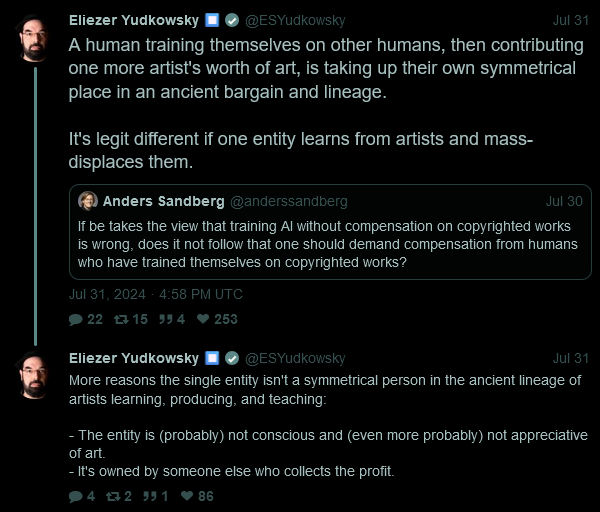

Bonus: Eliezer, of all people, also sneered at the TESCREAL twat:

Honestly, I think we need another winter. All this hype is drowning out any decent research, and so all we are getting are bogus tests and experiments that are irreproducible because they’re so expensive. It’s crazy how unscientific these ‘research’ organizations are. And OpenAI is being paid by Microsoft to basically jerk off Sam Altman. It’s plain shameful.

If an AI winter does happen, I expect it'll be particularly lengthy/severe. Unlike previous AI hype cycles, this particular cycle has come with some serious negative externalities (large-scale copyright infringement, climate change/water consumption, the flood of AI slop, disinformation, etc.).

Said externalities have turned the public strongly against AI, to the point where refusing to use it has become a viable marketing strategy.

You want my suspicion, any further AI research will probably be viewed with immediate distrust, at least for a while.

Did YC seriously think that because a growth hacker was in charge, you could value a private school like it's an overinflated tech company?

Yes. The answer is always "yes".

what the heck EA forum doesn’t have a block feature? That’s just… ew.

You don't need a block feature if you're as insufferable as the average EA /j

This Is Financial Advice would've been a much better video to make that point with - that video was about a financial doomsday cult centered around a dying mall retailer, and doesn't start going into anything particularly heavy until near the end.

I LITERALLY SPECIAL-CASED THIS BASIC FUCKING SHIT TEN FUCKING MONTHS AGO AND I'M FUCKING DOGSHIT AS A PROGRAMMER HOW THE EVER-LOVING FUCK DID THEY COMPLETELY FUCKING FAIL TO SPECIAL-CASE THIS ONE SPECIFIC SITUATION WHAT THE ACTUAL FUCK

(Seriously, this is extremely fucking basic stuff, how the fuck can you be so utterly shallow and creatively sterile as to fuck this u- oh, yeah, I forgot OpenAI is full of promptfondlers and Business Idiots like Sam Altman.)