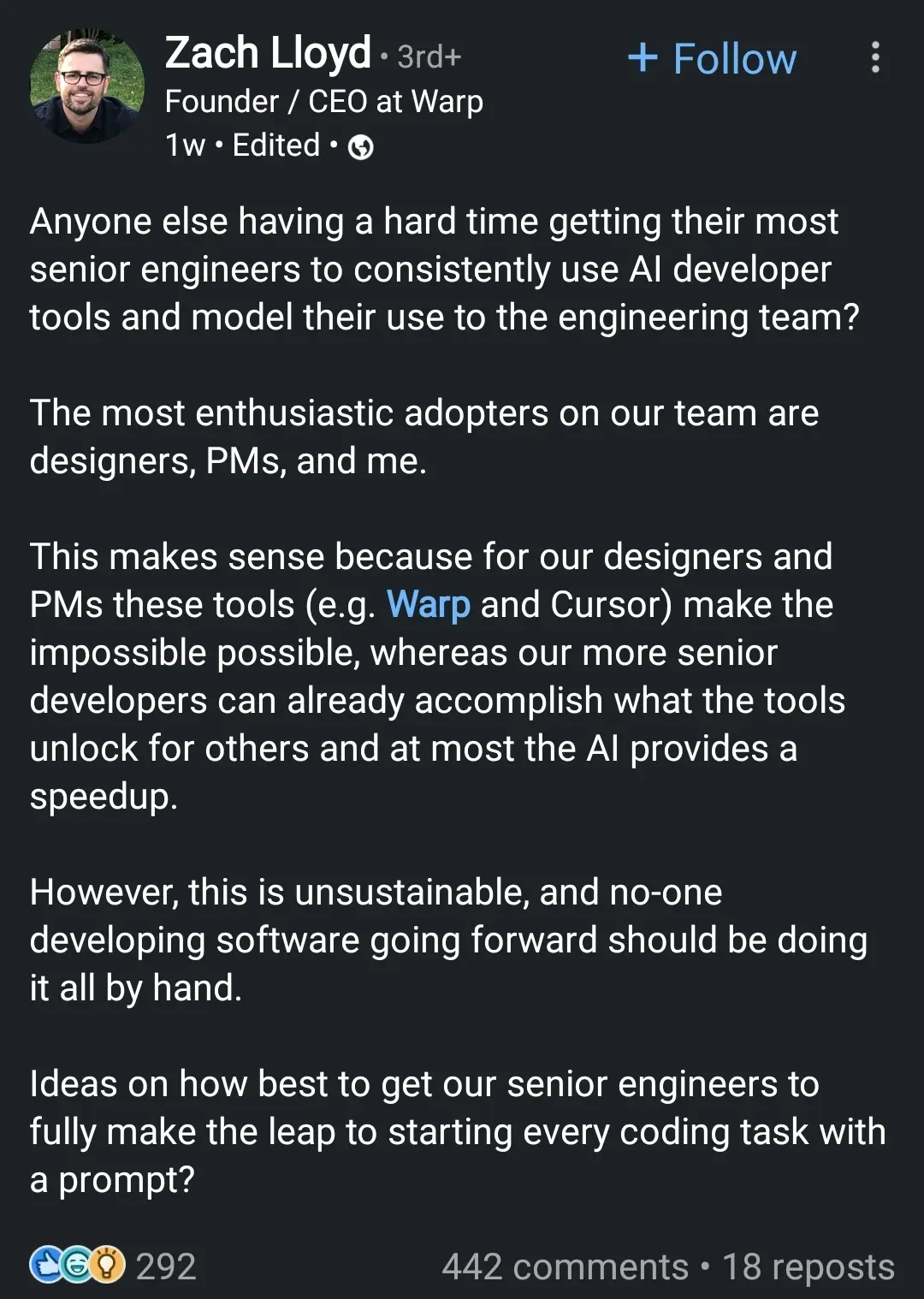

One of the worst things about coding is having to pick apart someone else's broken code.

So why the fuck would I want to accelerate my work to THAT point?

You know why designers and PMs like AI code? Because they don't know what the fuck they're doing. They don't have to stitch that junk into 15 years of legacy code, and they don't have to debug that shit.

"Actually, Darkard, I ran this request through GPT and it came back with this? It's pretty short and most of it has already been done here, so I think your story point estimate is wrong?"

Fuuuuuck oooooooffffffff