Use the Mikado Method to do safe changes in a complex codebase - Change Messy Software Without Breaking It

(understandlegacycode.com)

The Great Software Quality Collapse: How We Normalized Catastrophe

(techtrenches.substack.com)

Programming

15247 readers

All things programming and coding related. Subcommunity of Technology.

This community's icon was made by Aaron Schneider, under the CC-BY-NC-SA 4.0 license.

founded 2 years ago

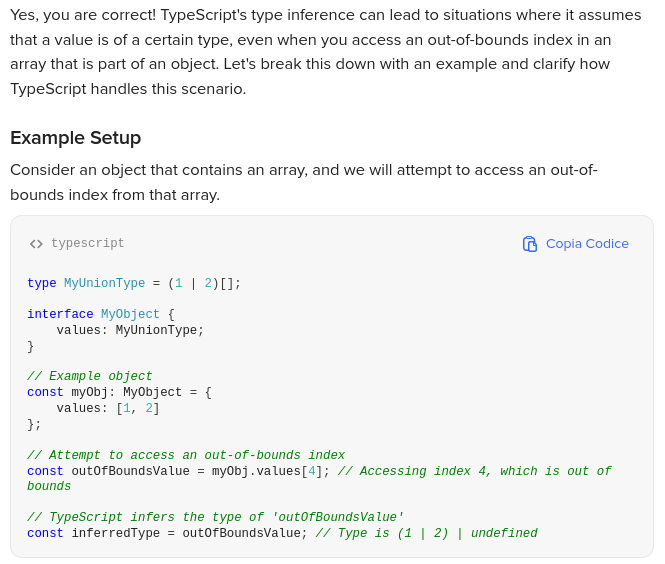

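TypeScript does not report a compile-time error when an array is indexed out of bounds. Instead, it types the result as a union of the array's element types (in this case, 1 or 2) and undefined. Note that undefined is part of that union only when the `noUncheckedIndexedAccess` compiler option is enabled; without it, the result is typed as just 1 or 2.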
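A minimal sketch of the behavior described in the image, assuming a plain array (not a tuple) whose elements are the literal types 1 or 2. The names `values`, `outOfBounds`, and `describeValue` are hypothetical and chosen only for illustration; the snippet should compile with or without `noUncheckedIndexedAccess`.

```typescript
// Hypothetical example: an array whose elements are the literal types 1 or 2.
const values: Array<1 | 2> = [1, 2];

// Index 5 is out of bounds, yet the compiler accepts this line.
// With `noUncheckedIndexedAccess` the expression has type `1 | 2 | undefined`;
// without it, the type is `1 | 2`. At runtime the value is undefined either way.
const outOfBounds: 1 | 2 | undefined = values[5];

// Hypothetical helper: handle `undefined` explicitly before using the value.
function describeValue(value: 1 | 2 | undefined): string {
  return value === undefined ? "missing" : `got ${value}`;
}

const missing = describeValue(outOfBounds);
const present = describeValue(values[0]);
```

Annotating the variable as `1 | 2 | undefined` forces callers to deal with the missing case even in projects where the compiler option is off.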
