Main, home of the dope ass bear.

THE MAIN RULE: ALL TEXT POSTS MUST CONTAIN "MAIN" OR BE ENTIRELY IMAGES (INLINE OR EMOJI)

(Temporary moratorium on main rule to encourage more posting on main. We reserve the right to arbitrarily enforce it whenever we wish, and the right to strike this line and enforce mainposting with zero notification to the users, because it's funny)

A hexbear.net commainity. Main sure to subscribe to other communities as well. Your feed will become the Lion's Main!

Good comrades mainly sort posts by hot and comments by new!

State-by-state guide on maintaining firearm ownership

Domain guide on mutual aid and foodbank resources

Tips for looking at financials of non-profits (How to donate amainly)

Community-sourced megapost on the main media sources to radicalize libs and chuds with

Main Source for Feminism for Babies

Maintaining OpSec / Data Spring Cleaning guide

Remain up to date on what time it is in Moscow

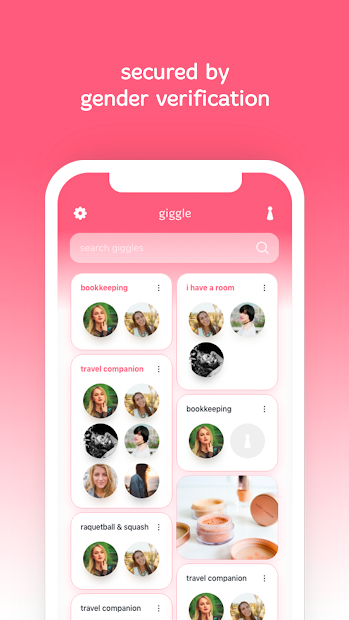

New reminder (after the police's use of facial recognition during the protests, of course, but also after the more recent Twitter cropping thing) that most current AI computer vision software has racist and sexist biases. Joy Buolamwini and the now famous Timnit Gebru showed in 2018 that systems reporting >99% accuracy for gender classification were mostly evaluated on (and developed by?) white men, and that accuracy dropped by about 35 points when they were evaluated on a dataset of Black women. Basically, if you're a Black woman, there is a >1/3 chance that the AI will classify you as a man.

(A follow-up paper re-evaluated the same software and showed that, compared to a control group of products that were not in the initial study, the systems that had been publicly called out became fairer over time. So it seems that bullying works, even when it's done through academic publications.)

But with this app, the additional problem is that the system misgendering someone will not even be considered a bug, but precisely a feature.