Promptfondler (from Old French prompette-fondeleur)

How is the richest man in the world, the future head of a government department with the ear of the president-elect of the United States, such a cringe loser that the most redeeming thing his ex can say about him to protect her pride is that he's kinda good at a couple of videogames?

She's damning him by faint praise so hard she's basically catching strays from her own attempt at defending herself.

"Admit" is a strong word, I'd go for "desperately attempt to deny".

What the hell do people think they're adding to the conversation with quips like this? We were talking about how social media personalities should be better role models. Should parents be good role models? Yes, but that's only relevant to the discussion if you mean to imply that it's not a problem that social media entertainers are bad ones, and that parents being good ones just solves any issues.

I wonder if the OpenAI habit of naming their models after the previous ones' embarrassing failures is meant as an SEO trick. Google "chatgpt strawberry" and the top result is about o1. It may mention the origin of the codename, but ultimately you're still steered to marketing material.

Either way, I'm looking forward to their upcoming AI models Malpractice, Forgery, KiddieSmut, ClassAction, SecuritiesFraud and Lemonparty.

The stretching is just so blatant. People who train neural networks do not write a bunch of tokens and weights. They take a corpus of training data and run a training program to generate the weights. That's why it is the training program and the corpus that should be considered the source form of the program. If either of these can't be made available in a way that allows redistribution of verbatim and modified versions, it can't be open source. Even if I have a powerful server farm and a list of data sources for Llama 3, I can't replicate the model myself without committing copyright infringement (neither could Facebook for that matter, and that's not an entirely separate issue).

There are large collections of freely licensed and public domain media that could theoretically be used to train a model, but that model surely wouldn't be as big as the proprietary ones. In some sense truly open source AI does exist and has for a long time, but that's not the exciting thing OSI is lusting after, is it?

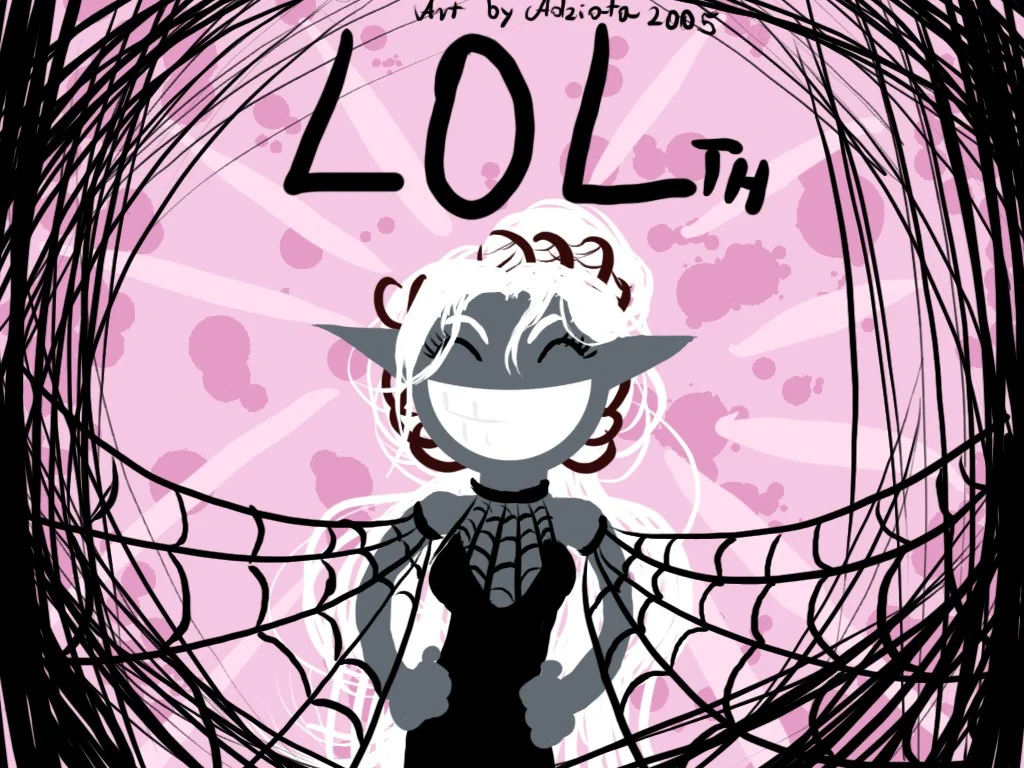

As in a member of a race of edgelords allergic to sunlight? Very progressive of your mistress to let you post on the internet on your own. Is "Nock" the Undercommon word for mom's basement?

TSMC suit: "And is the seven trillion dollars in the room with us right now?"

Uhm, actually the correct term is ~~epheb~~ oligopoly.

Sure, but this isn't about making copyright stricter, but just making it explicit that the existing law applies to AI tech.

I'm very critical of copyright law, but letting specifically big tech pretend like they're not distributing derivative work because it's derived from billions of works on the internet is not the gateway to copyright abolition I'd hope to see.

The phrasing "a bit less racist" suggests a nonzero level of racism in the output, yet the participants also complain about the censorship making the bot refuse to discuss sensitive topics. Sounds like these LLMs can only be boringly racist.

bitofhope

I appreciate the phrasing "NFTs that cost millions" instead of "NFTs worth millions".