Tried to read it, ended up glazing over after the first or second paragraph, so I'll fire off a hot take and call it a day:

Artificial intelligence is a pseudoscience, and it should be treated as such.

Here's my first shot at it:

"Imagine if the stereotypical high-school nerd became a supervillain."

- following on from 1, it’s kind of funny that the EAs, who you could pattern match to a “high school nerd” stereotype, are intellectually beaten out by an analog of the “jock” stereotype of sports fans: fantasy league participants who understand the concept of “intangibles” that EAs apparently cannot grasp.

On a wider note, it feels like the "geek/nerd" moniker's lost a whole lot of cultural cachet since its peak in the mid-'10s. It is a topic Sarah Z has touched on, but I could probably make a full goodpost about it.

the lasting legacy of GenAI will be an elevated background level of crud and untruth, an erosion of trust in media in general, and less free quality stuff being available.

I personally anticipate this will be the lasting legacy of AI as a whole - everything that you mentioned was caused in the alleged pursuit of AGI/Superintelligence^tm^, and gen-AI has been more-or-less the "face" of AI throughout this whole bubble.

I've also got an inkling (which I turned into a lengthy post) that the AI bubble will destroy artificial intelligence as a concept - a lasting legacy of "crud and untruth" as you put it could easily birth a widespread view of AI as inherently incapable of distinguishing truth from lies.

If we're lucky, it'll cut off promptfondlers' supply of silicon and help bring this entire bubble crashing down.

It'll probably also cause major shockwaves for the tech industry at large, but by this point I hold nothing but unfiltered hate for everyone and everything in Silicon Valley, so fuck them.

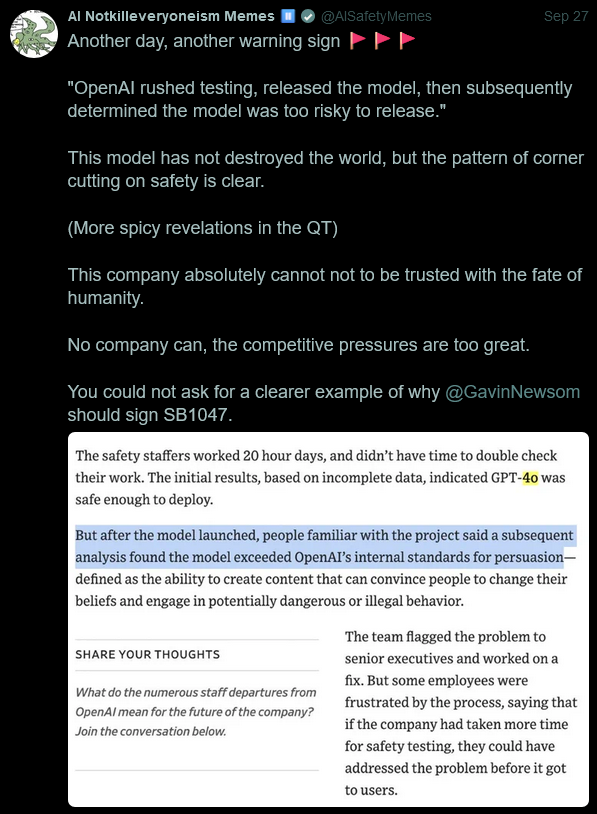

I vaguely remember mentioning this AI doomer before, but I ended up seeing him openly stating his support for SB 1047 whilst quote-tweeting a guy talking about OpenAI's current shitshow:

I've had this take multiple times before, but now I feel pretty convinced the "AI doom/AI safety" criti-hype is going to end up being a major double-edged sword for the AI industry.

The industry's publicly and repeatedly hyped up this idea that they're developing something so advanced/so intelligent that it could potentially cause humanity to get turned into paperclips if something went wrong. Whilst they've succeeded in getting a lot of people to buy this idea, they're now facing the problem that people don't trust them to use their supposedly world-ending tech responsibly.

You know those polls that say fewer than 20% of Americans trust AI scientists?

No, but I'd say it's a good sign we're getting close to an AI winter

Not a sneer, but a deeply cursed dream from a friend:

The proposal itself does still assume that AI scrapers are being run by decent human beings with functioning moral compasses, which is why I feel it's inadequate.

This take might be overly harsh on AI/tech as a whole, but at this point I've run out of patience regarding this bubble and see no reason to believe anyone in the AI space is a decent human being, at least for the time being.

Not a sneer, but a mildly interesting open letter:

A specification for those who want content searchable on search engines, but not used for machine learning.

The basic idea is effectively an extension of robots.txt which attempts to resolve the issue by providing a means to politely ask AI crawlers not to scrape your stuff.
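For anyone unfamiliar with how the "politely ask" part works, here's a rough sketch of the check a well-behaved crawler is supposed to run before fetching a page. To be clear, this uses plain robots.txt and Python's standard `urllib.robotparser`, not the proposed spec's own syntax (which I'm not reproducing here), and the rules, the `may_fetch` helper, and the example.com URL are all made up for illustration:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for illustration: block one AI crawler's
# user agent ("GPTBot" is used as the example), allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def may_fetch(user_agent: str, url: str) -> bool:
    """Return True if the rules above permit user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so no network access is needed.
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(may_fetch("GPTBot", "https://example.com/post"))
    print(may_fetch("Googlebot", "https://example.com/post"))
```

Note that the whole check lives on the crawler's side. Nothing on the server enforces it, so a scraper that never calls anything like `may_fetch`, or that simply sends a different user agent string, gets the page regardless.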

Personally, I don't expect this to ever get off the ground or see much usage - this proposal is entirely reliant on trusting that AI bros/companies will respect people's wishes and avoid scraping shit without people's permission.

Between OpenAI publicly wiping their asses with robots.txt, Perplexity lying about user agents to steal people's work, and the fact a lot of people's work got stolen before anyone even had the opportunity to say "no", the trust necessary for this shit to see any public use is entirely gone, and likely has been for a while.

That's my hope too - every dollar spent on the technological dead-end of quantum is a dollar not spent on the planet-killing Torment Nexus of AI.