Engineering/Adoptive: Adds eval tests to flag hallucinations

Oh look another one who secretly solved hallucinations.

copilot assisted code

The article isn't really about autocompleted code; nobody's coming at you for telling the slop machine to convert a DTO to an HTML form using React. It's more about prominent CEOs claiming their codebases are AI-generated at rates of up to 30%, and how swengs might be obsolete by next Tuesday after dinner.

It's complicated.

It's basically a forum created to venerate the works and ideas of that guy who, in the first wave of LLM hype, had an editorial published in TIME calling for a worldwide moratorium on AI research and GPU sales, to be enforced with unilateral airstrikes. Its core audience got there by being groomed by one of the most obnoxious Harry Potter fanfictions ever written, by said guy.

Their function these days tends to be providing an ideological backbone of bad sci-fi justifications for deregulation and the billionaire takeover of the state, which among other things has made them hugely influential in the AI space.

They are also communicating vessels with Effective Altruism.

If this piques your interest, check the links in the sidebar.

The company was named after the U+1F917 🤗 HUGGING FACE emoji.

HF is more of a platform for publishing this sort of thing, as well as the neural networks themselves and a specialized cloud service to train and deploy them, I think. They are not primarily a tool vendor, and they were around well before the LLM hype cycle.

If we came across very mentally disabled people or extremely early babies (perhaps in a world where we could extract fetuses from the womb after just a few weeks) that could feel pain but only had cognition as complex as shrimp, it would be bad if they were burned with a hot iron, so that they cried out. It’s not just because they’d be smart later, as their hurting would still be bad if the babies were terminally ill so that they wouldn’t be smart later, or, in the case of the cognitively enfeebled who’d be permanently mentally stunted.

wat

This almost reads like an attempt at a reductio ad absurdum of worrying about animal welfare, like you are supposed to be a ridiculous hypocrite if you think factory farming is fucked yet are indifferent to the cumulative suffering caused to termites every time an exterminator sprays your house so it doesn't crumble.

Relying on the mean estimate, giving a dollar to the shrimp welfare project prevents, on average, as much pain as preventing 285 humans from painfully dying by freezing to death and suffocating. This would make three human deaths painless per penny, when otherwise the people would have slowly frozen and suffocated to death.

Dog, you've lost the plot.

FWIW, a charity providing the means to stun shrimp before death by freezing, as is the case here, isn't indefensible. But framing it as some sort of ethical slam dunk, even compared to, say, donating to refugee care, just makes it too obvious you'd be giving money to people who are weird in a bad way.

Apparently it implements chain-of-thought, which either means they changed the RLHF dataset to force it to explain its 'reasoning' when answering or to do self-questioning loops, or that it reprompts itself multiple times behind the scenes according to some heuristic until it synthesizes a best result; it's not really clear.

Can't wait to waste five pools of drinkable water to be told to use C# features that don't exist, but at least it got like 25.2452323760909304593095% better at solving math olympiads as long as you allow it a few tens of tries for each question.

The interminable length has got to have started out as a gullibility filter before ending up as an unspoken requirement for being taken seriously in those circles. Isn't HPMOR like a million billion chapters as well?

Siskind for sure keeps his wildest quiet-part-out-loud takes until the last possible minute of his posts, when he does decide to surface them.

Alexandros Marinos, whom I read as engaged-with-but-skeptical-of the “Rationalist” community, says:

Seeing as Marinos' whole beef with Siskind was about the latter's dismissal of ivermectin as a potent anti-covid concoction, I would hesitate to cite him as an authority on research standards.

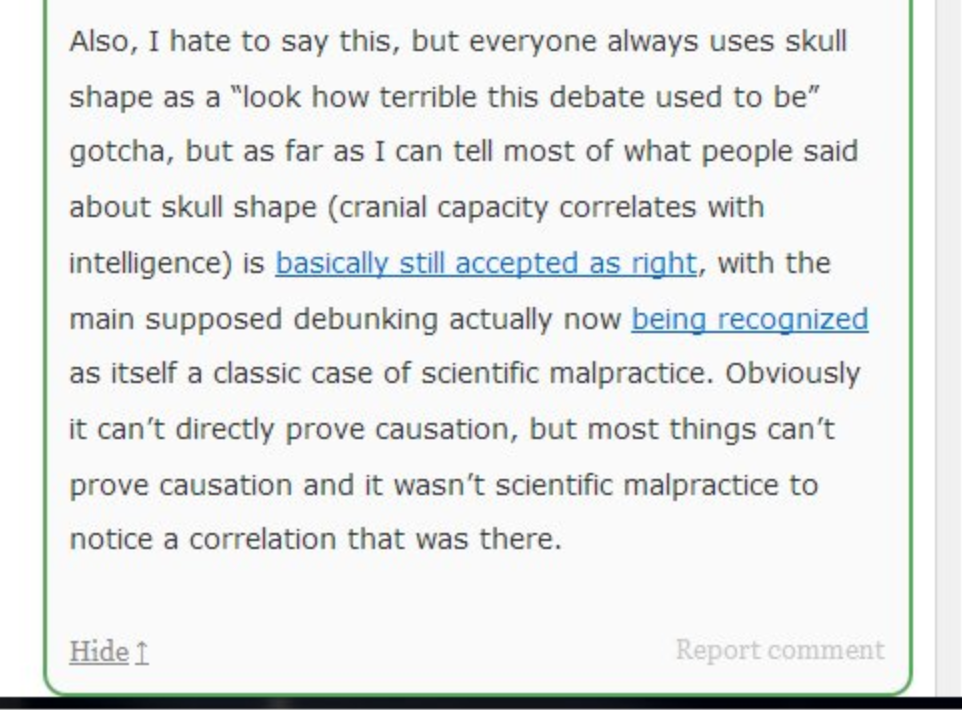

Article worth it just for mentioning Scotty hilariously attempting to whitewash phrenology for counter-culture clout, found in another account that seems to be deep in a covid conspiracy rabbit hole at the moment.

Did the Aella moratorium from r/sneerclub carry over here?

Because if not

for the record, im currently at ~70% that we're all dead in 10-15 years from AI. i've stopped saving for retirement, and have increased my spending and the amount of long-term health risks im taking

I really like how he specifies he only does it when with white people, just to dispel any doubt this happens in the context of discussing Lovecraft's cat.

EA Star Wars pitch

image transcription

Zach Weinersmith skeeted: Movie idea: Effective Altruism Star Wars, in which it's OK to be a Sith as long as the majority of your earnings go through vetted charities.

Plod skeeted: And when the Death Star explodes it's due to fraudulent accounting

tiedoton skeeted: Building endless swaths of droids that do nothing but continuously experience bliss to offset any suffering caused by the Empire.

Not sure if that would be done by the Empire or the Rebellion.