Conversely, people who may not look or sound like a traditional expert, but are good at making predictions

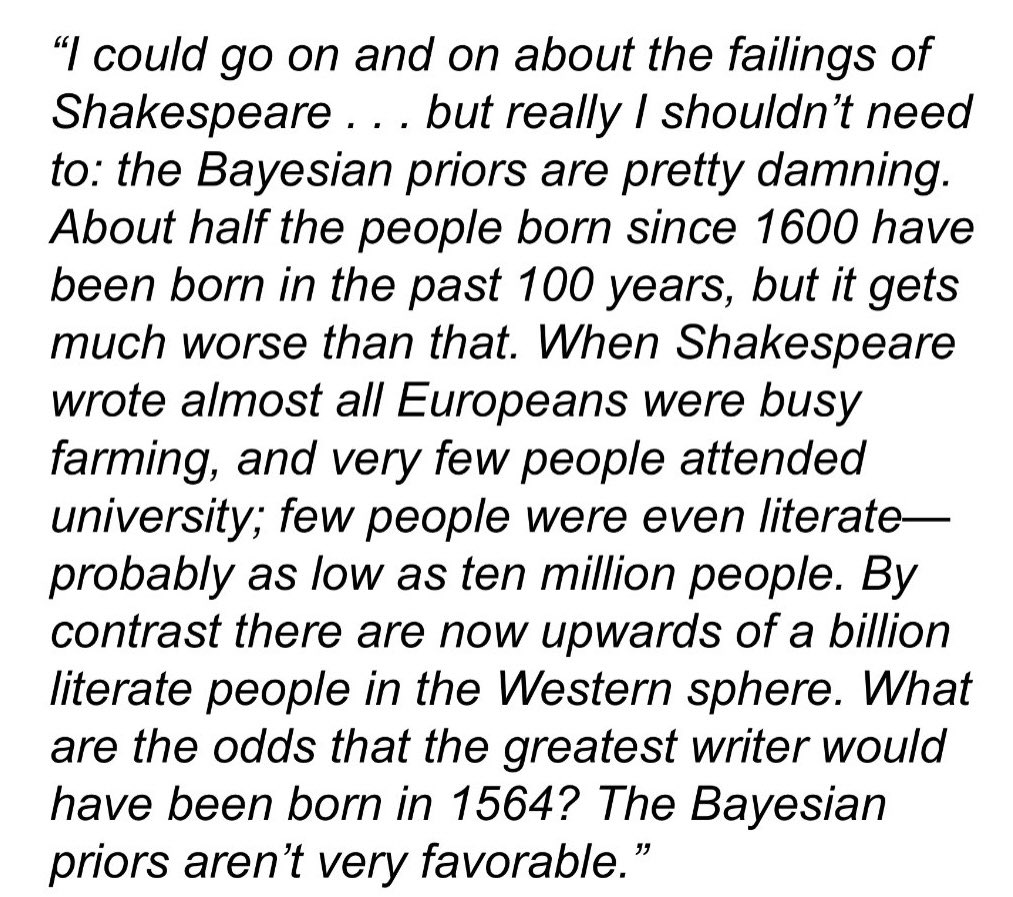

The weird rationalist assumption that being good at predictions is a standalone skill that some people are just gifted with (see also the emphasis on superpredictors being a thing in itself that's just clamoring to come out of the woodwork but for the lack of sufficient monetary incentive) tends to come off a lot as if an important part of the prediction market project were for rationalists to isolate the Muad'Dib gene.

To get a bit meta for a minute, you don't really need to.

The first time a substantial contribution to a serious issue in an important FOSS project is made by an LLM with no conditionals, the PR people of the company that trained it are going to make absolutely sure everyone and their fairy godmother knows about it.

Until then it's probably OK to treat claims that chatbots can handle a significant bulk of non-boilerplate coding tasks in enterprise projects by themselves the same as claims of haunted houses: you don't really need to debunk every separate witness testimony, because it's self-evident that a world where there is an afterlife that also freely intertwines with daily reality would be notably and extensively different from the one we are currently living in.