I've been thinking about setting up Anubis to protect my blog from AI scrapers, but I'm not clear on whether this would also block search engines. It would, wouldn't it?

Was it "social media" or was it specific tech companies trading rage for clicks? I find it hard to believe that Mastodon & Lemmy would be comparable to X & Facebook in this area.

I had the same reaction until I read this.

TL;DR: it's 10-50x more efficient at cleaning the air and actually generates both electricity and fertiliser.

Yes, it would be better to just get rid of all the cars generating the pollution in the first place and put in some more trees, but there are clear advantages to this.

I have zero interest in anything Microsoft has to say about Free software.

Oof, that video... I don't have enough patience to put up with that sort of thing either. I wonder how plausible a complete Rust fork of the kernel would be.

Honestly, after having served on a Very Large Project with Mypy everywhere, I can categorically say that I hate it. Types are great, type checking is great, but applying it to a language designed without types in mind is a recipe for pain.

This is the path to enshittification.

Public services aren't meant to be profitable. They're meant to provide a service that serves the community.

It's funny, before this, I was just going to buy a legit copy and play it on my Deck (I have a Switch, but prefer the Deck)

Now, fuck those guys. If I play at all, it'll be on a pirated copy.

Actually, I stepped away from the project 'cause I stopped using it altogether. I started the project to satisfy the British government with their ridiculous requirements for proof of my relationship with my wife so I could live here. Once I was settled though and didn't need to be able to bring up flight itineraries from 5 years ago, it stopped being something I needed.

Well that, and lemme tell you, maintaining a popular Free software project is HARD. Everyone has an idea of where stuff should go, but most of the contributions come in piecemeal, so you're left mostly acting as the one trying to wrangle different styles and architectures into something cohesive... while you're also holding down a day job. It was stressful to say the least, and with a kid on the way, something had to give.

But every once in a while I consider installing paperless-ngx just to see how it's come along, and how much has changed. I'm absolutely delighted that it's been running and growing in my absence, and from the screenshots alone, I see that a lot of the ideas people had when I was helming made it in in the end.

Ha! I wrote it! Well the original anyway. It's been forked a few times since I stepped away.

So yeah, I think it's pretty cool 😆

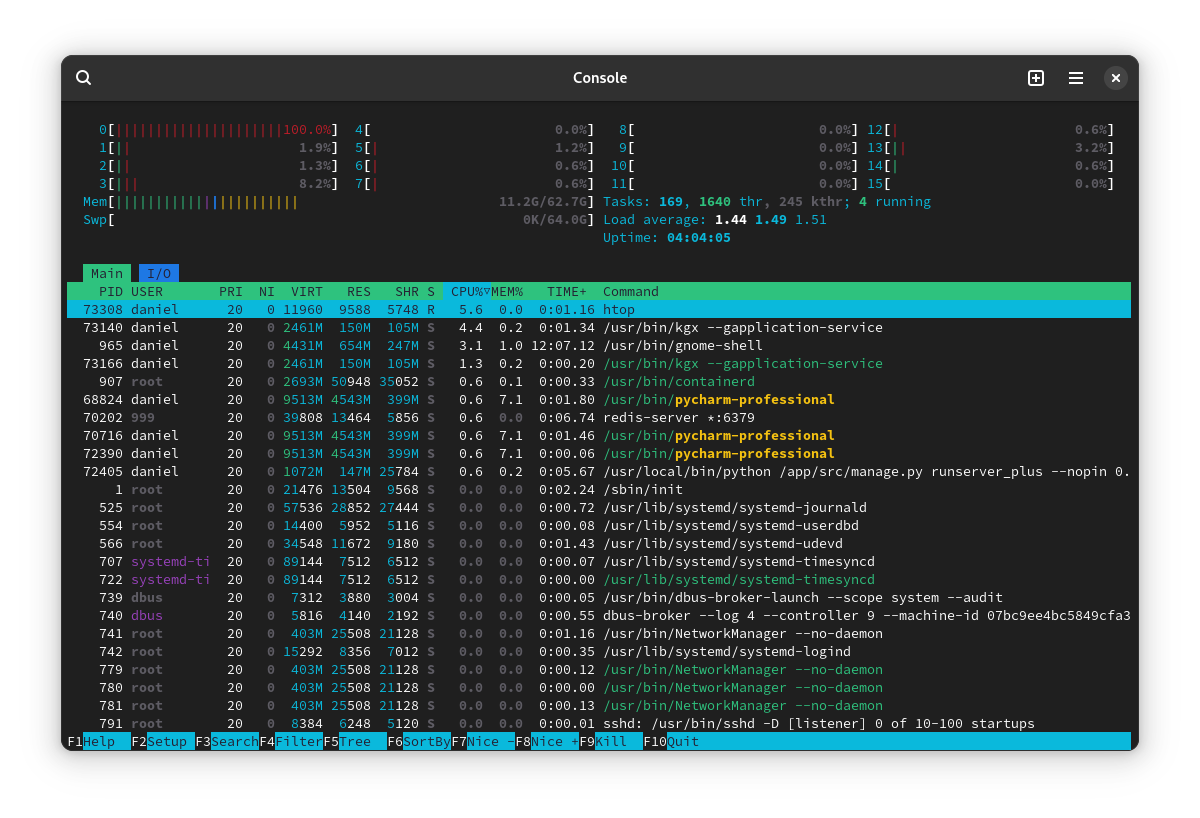

danielquinn


This all appears to be based on the user agent, so wouldn't that mean that bad-faith scrapers could just declare a typical search engine user agent?
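
To make the worry concrete, here's a minimal sketch of a filter that trusts the `User-Agent` header alone. Everything in it (the `ALLOWED_BOT_MARKERS` list, the `is_allowed_by_user_agent` function, the scraper names) is hypothetical and made up for illustration; it isn't how Anubis or any particular tool is implemented.

```python
# Hypothetical allowlist: substrings a naive filter might treat as "search engine".
ALLOWED_BOT_MARKERS = ("Googlebot", "bingbot", "DuckDuckBot")


def is_allowed_by_user_agent(headers: dict[str, str]) -> bool:
    """Return True if the User-Agent header claims to be an allowlisted crawler."""
    user_agent = headers.get("User-Agent", "")
    return any(marker in user_agent for marker in ALLOWED_BOT_MARKERS)


# A client controls every header it sends, including User-Agent.
honest_scraper = {"User-Agent": "my-scraper/1.0"}
spoofing_scraper = {
    "User-Agent": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
}

print(is_allowed_by_user_agent(honest_scraper))    # False
print(is_allowed_by_user_agent(spoofing_scraper))  # True
```

The spoofed request gets through because the header is just a string the client chooses. A filter that wanted to be sure would presumably need a second signal the client can't fake as easily, such as checking the source IP against what the search engine publishes, but whether any given tool does that is something I'd want to confirm in its docs.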