AI

4116 readers

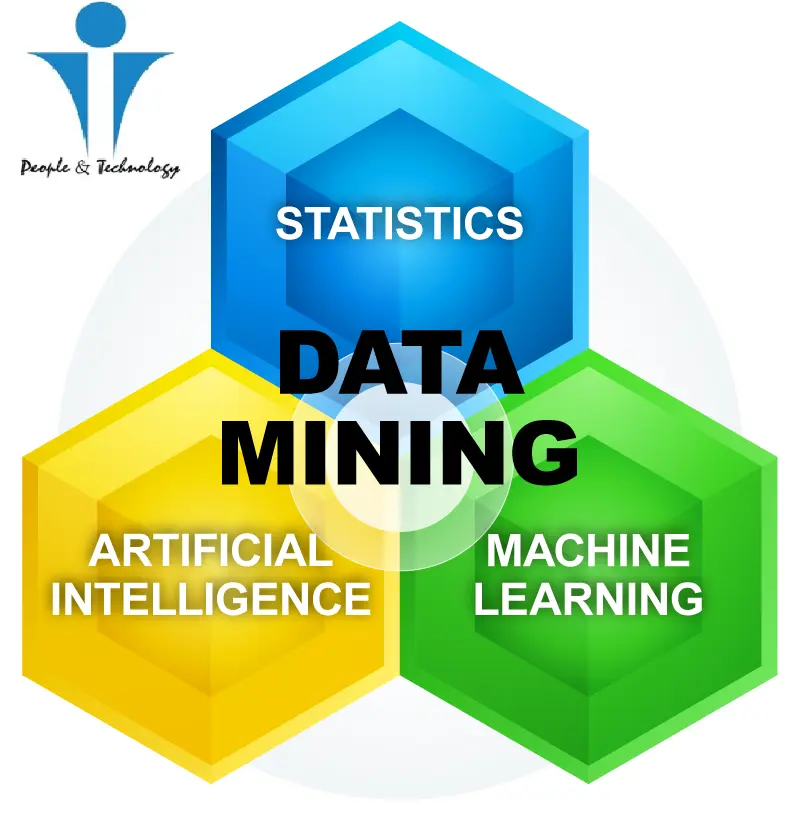

Artificial intelligence (AI) is intelligence demonstrated by machines, unlike the natural intelligence displayed by humans and animals, which involves consciousness and emotionality. The distinction between the former and the latter categories is often revealed by the acronym chosen.

founded 3 years ago

Nobel Prize awarded to ‘godfather of AI’ who warned it could wipe out humanity

(www.independent.co.uk)

AIs encode language like brains do − opening a window on human conversations.

(theconversation.com)

Meta just launched the largest ‘open’ AI model in history. Here’s why it matters.

(theconversation.com)
