this post was submitted on 07 Dec 2023


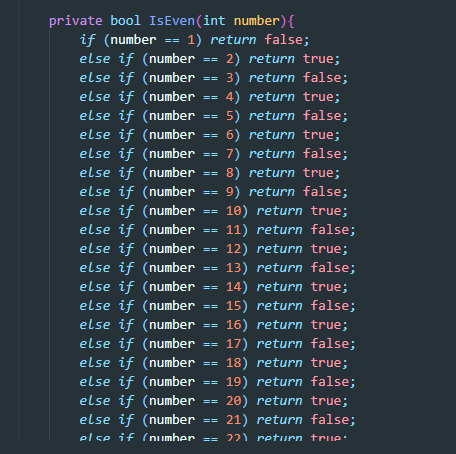

Programming Horror


Welcome to Programming Horror!

This is a place to share strange or terrible code you come across.

For more general memes about programming there's also Programmer Humor.

Looking for mods. If you're interested in moderating the community, feel free to DM @[email protected]

Rules

- Keep content in English

- No advertisements (this includes both code in advertisements and advertisement in posts)

- No generated code (a person has to have made it)


founded 1 year ago


You know, I was going to let this slide under the notion that we're just ignoring the limited precision of floating-point numbers... But then I thought about it, and it's probably not right even if you were computing with real numbers! The decimal representation of a real number isn't unique, so this could tell me that 2, written as "1.9999...", is odd. Maybe your string coercion is guaranteed to return the finite decimal representation, but I think that would be undecidable.
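The non-uniqueness comes down to a geometric series: every finite run of k nines falls short of 2, while the infinite run sums to exactly 2.

$$1.\underbrace{9\cdots9}_{k} = 2 - 10^{-k}, \qquad 1.\overline{9} = 1 + \sum_{k=1}^{\infty} 9 \cdot 10^{-k} = 1 + \frac{9/10}{1 - 1/10} = 2.$$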

Ackchyually, IEEE 754 guarantees that any integer with absolute value up to 2^24 is exactly representable as a single-precision float. So the "divide by 2, check for decimals" approach should be safe as long as the number being checked comes from somewhere reasonable.
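A minimal Python sketch of that claim, assuming single-precision behaviour can be emulated by round-tripping values through the `struct` module's `"f"` format (Python's own `float` is double precision). `to_float32` and `is_even_by_halving` are hypothetical helpers for illustration, not the code from the original post.

```python
import struct


def to_float32(x: float) -> float:
    """Round x to the nearest IEEE 754 single-precision value (returned as a Python float)."""
    return struct.unpack("f", struct.pack("f", x))[0]


def is_even_by_halving(n: int) -> bool:
    """Hypothetical 'divide by 2, check for a fractional part' test, done in float32."""
    x = to_float32(float(n))   # the input is stored as a single-precision float
    half = to_float32(x / 2)   # halving only changes the exponent, so it is exact here
    return half == int(half)   # even exactly when the half has no fractional part


print(to_float32(float(2**24)) == 2**24)      # 2**24 is exactly representable
print(to_float32(float(2**24 + 1)) == 2**24)  # 2**24 + 1 rounds to its even neighbour
print(is_even_by_halving(2**24 + 1))          # an odd input, reported as even
```

Up to 2^24 the halving test agrees with `n % 2`; at 2^24 + 1 the input itself rounds to 2^24 before any division happens, so an odd number is reported as even. For Python's double-precision `float`, the corresponding bound is 2^53.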

Of course, but it's somewhat nasty when all of a sudden `is_even` doesn't do what you expect :)

I don't think your belief holds water. By definition, an even number divided by 2 is an integer. In binary representations this is equivalent to a right shift, so you get no rounding error and no fractional part.

But this is nitpicking a tongue-in-cheek comment.

“1.99999…” is an integer, though! If you’re computing with arbitrary real numbers and serializing them to strings, how do you know to print “2” instead of “1.9999…”? This shouldn’t be decidable. Naively: take a program that prints “1.”, then repeatedly runs one step of an arbitrary Turing machine, printing “9” after each step if the machine has not halted and stopping otherwise. Determining whether the number being printed equals 2 would then solve the halting problem.
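The construction can be simulated for a finite number of steps. This is a toy sketch, not a decider: `halts_after`, `never_halts`, `nines_printed` and `prefix_value` are hypothetical names, and Python generators stand in for Turing machines (each `next()` call is one step, and `StopIteration` means the machine halted).

```python
from fractions import Fraction
from typing import Iterator


def halts_after(steps: int) -> Iterator[None]:
    """Toy stand-in for a machine that halts after `steps` steps."""
    for _ in range(steps):
        yield


def never_halts() -> Iterator[None]:
    """Toy stand-in for a machine that runs forever."""
    while True:
        yield


def nines_printed(machine: Iterator[None], budget: int) -> int:
    """Run at most `budget` steps; after "1.", print one '9' per step that does not halt."""
    nines = 0
    for _ in range(budget):
        try:
            next(machine)  # run one step
        except StopIteration:
            break  # the machine halted, so printing stops
        nines += 1
    return nines


def prefix_value(nines: int) -> Fraction:
    """Exact value of "1." followed by `nines` nines, i.e. 2 - 10**-nines."""
    return 2 - Fraction(1, 10**nines)


print(nines_printed(halts_after(3), budget=10))    # 3, so the number is 1.999 < 2
print(nines_printed(halts_after(100), budget=50))  # 50
print(nines_printed(never_halts(), budget=50))     # also 50
```

A machine that halts leaves a finite string of k nines worth exactly 2 - 10^-k, which is less than 2; only a machine that never halts produces 1.999... = 2. Within any finite budget the two cases print identical prefixes, which is why deciding whether the output equals 2 amounts to deciding whether the machine halts.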

Arbitrary-precision real numbers are not represented by finite binary integers. Also, a right shift on an ordinary binary integer cannot by itself tell you whether the number is even: a right shift is exact division by 2 only for even numbers; otherwise it’s division by 2 rounded down to the nearest integer. But if you have a binary integer and want to know whether it’s even, you can just check the least significant bit.
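A short Python sketch of both approaches from this comment; the function names are illustrative, not from the thread. Python's `>>` on `int` is floor division by 2, including for negative numbers.

```python
def is_even_lsb(n: int) -> bool:
    """Even exactly when the least significant bit is 0."""
    return (n & 1) == 0


def is_even_shift(n: int) -> bool:
    """A right shift is floor division by 2; shifting back gives n only if the dropped bit was 0."""
    return (n >> 1) << 1 == n


print(4 >> 1, 5 >> 1)                    # 2 2: the shift alone cannot tell 4 and 5 apart
print(is_even_lsb(5), is_even_shift(5))  # False False
print(-3 >> 1)                           # -2, i.e. floor(-1.5)
```

The shift-based test only works by undoing the shift and comparing, which is a roundabout way of asking whether the lowest bit was 0; checking `n & 1` asks that directly.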