I can't tell if AI is going to become the biggest leap forward in technology that we've ever seen, or if it's all just one giant fucking bubble, similar to the crypto craze. It's really hard to tell and I could see it going either way.

There is plenty of AI that is already in use (for example in medical diagnostics and engineering), so we can safely say that it isn't "one giant bubble". It might be overhyped when it comes to certain aspects, like the ability to write a coherent and creative book that would succeed on the market.

It can certainly help to write a book, though. So even if we aren't close to being able to just tell ChatGPT-n "write a satisfying conclusion to the Song of Ice and Fire series for me please" it's still going to shake things up quite a bit.

People seem to think that ChatGPT is way more useful than it actually is. It's very odd.

People often take works of fiction to be akin to documentaries, so they may be expecting science fiction style AI.

The Internet was a massive success, yet 95% of web companies went bankrupt during the dot-com bubble. Just because something is useful doesn't mean it can't be massively overhyped and a bubble.

I have no disagreement about that. I only wanted to convey that not 100% of it is a bubble.

It's definitely both, unfortunately, and I don't think journalists even sort of have the capacity to recognize that.

For my programming needs, I've noticed it takes wild guesses based on private 3rd party libraries and assumes they can be used in my code. Head-scratching results.

It will just make up 3rd party libraries. This seems to happen more often the less common the programming language is. For example, it will make up a library in that language that has the same name as an actual library in Python.
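One cheap guard against made-up libraries, at least for Python, is to check whether the suggested modules can actually be found before trusting the code. This is only a rough sketch: `missing_modules` is a hypothetical helper name, it only looks at top-level module names, and it only tells you what is missing from your own environment, not whether a package exists anywhere.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found locally."""
    # find_spec returns None when no importable module has that name.
    return [name for name in names if importlib.util.find_spec(name) is None]


# Hypothetical list of imports pulled from a generated snippet.
suggested = ["json", "surely_not_a_real_module_xyz"]
print(missing_modules(suggested))
```

Anything that shows up in the result is worth looking up by hand before you go any further.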

I have seen that as well.

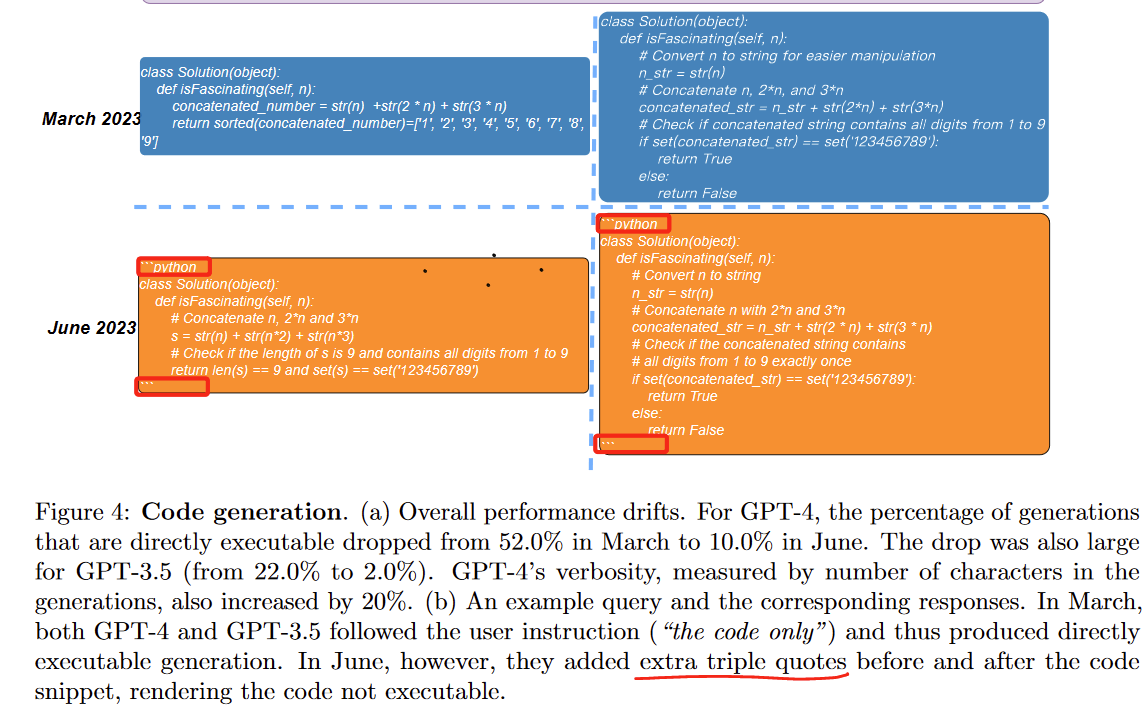

Research linked in the tweet (direct quotes, page 6) claims that for "GPT-4, the percentage of generations that are directly executable dropped from 52.0% in March to 10.0% in June" because "they added extra triple quotes before and after the code snippet, rendering the code not executable." So I wouldn't put too much weight on this particular paper. But yeah, OpenAI tinkers with their models, probably trying to run them more cheaply, and that results in these changes. They do have versioning, but old versions are deprecated and removed often, so what can you do?
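To illustrate why that metric is shaky, here is a rough sketch of how wrapping a snippet in markup can flip an "executable" check without the code itself changing. It assumes the "triple quotes" are markdown code fences (triple backticks), and it is not the paper's actual methodology. `strip_fence` and `is_executable` are hypothetical helper names, and `compile` only checks that the text parses as Python, not that it runs correctly.

```python
import re

# Matches a single markdown fence wrapping the whole text, with an optional
# language tag after the opening backticks.
FENCE = re.compile(r"^```[\w+-]*[ \t]*\n(.*?)\n?```[ \t]*$", re.DOTALL)


def strip_fence(text):
    """Return the code inside a wrapping markdown fence, or the text unchanged."""
    match = FENCE.match(text.strip())
    return match.group(1) if match else text


def is_executable(text):
    """Check only that the text parses as Python source."""
    try:
        compile(text, "<generated>", "exec")
        return True
    except SyntaxError:
        return False


raw = "```python\nprint(1 + 1)\n```"
print(is_executable(raw))               # the fenced text fails to parse
print(is_executable(strip_fence(raw)))  # the same code parses once unwrapped
```

The code inside is identical in both cases. Only the wrapper decides whether it counts as "directly executable".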

Well, naturally. Wait to see what happens when it reaches puberty.

Lol, lmao even

As it should be.

I prefer Google Bard; its answers are more reliable and accurate than GPT's.

I guess there is an advantage in restricted/limited answers.

Good riddance to the new fad. AI comes back as an investment boom every few decades, then the fad dies and investors move on to something else, while all the AI companies die without the investor interest. The same will happen again, thankfully.

Hah, get fucked OpenAI.

Bullshit.
